Oracle-AMD Strategic Partnership: Transformative AI Supercluster Initiative Analysis

About us: Ginlix AI is the AI Investment Copilot powered by real data, bridging advanced AI with professional financial databases to provide verifiable, truth-based answers. Please use the chat box below to ask any financial question.

Oracle and AMD have announced an expansion of their collaboration to deploy the first publicly available AI supercluster powered by 50,000 AMD Instinct MI450 Series GPUs, with deployment scheduled to begin in calendar Q3 2026. The partnership positions Oracle as the first hyperscaler to offer AI compute at this scale on AMD’s next-generation hardware, potentially disrupting an AI infrastructure landscape dominated by NVIDIA.

The collaboration represents a multi-billion dollar opportunity: AMD stands to generate “tens of billions” in revenue from the multi-year agreement, while Oracle gains a competitive differentiator in the rapidly expanding AI infrastructure market. Quarterly ARR additions in that market grew from $5.9B in Q1 2022 to $21.4B in Q2 2025.
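
To put the ARR figures quoted above in perspective, the short sketch below derives the overall growth multiple and an implied compound quarterly growth rate. It assumes smooth compounding between the two endpoints, which the source data does not claim; the variable names are illustrative only.

```python
# Quarterly ARR additions quoted in the text, in billions of USD.
start_arr_b = 5.9   # Q1 2022
end_arr_b = 21.4    # Q2 2025

# Q1 2022 -> Q2 2025 spans 13 quarter-to-quarter steps (3 years plus 1 quarter).
quarters_elapsed = 13

# Overall growth multiple between the two endpoints.
growth_multiple = end_arr_b / start_arr_b

# Assumption: growth compounds evenly each quarter between the endpoints.
implied_quarterly_growth = growth_multiple ** (1 / quarters_elapsed) - 1

print(f"Growth multiple: {growth_multiple:.2f}x")
print(f"Implied compound quarterly growth: {implied_quarterly_growth:.1%}")
```

Under that assumption, the two data points imply a market that more than tripled over the period, growing at roughly ten percent per quarter.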

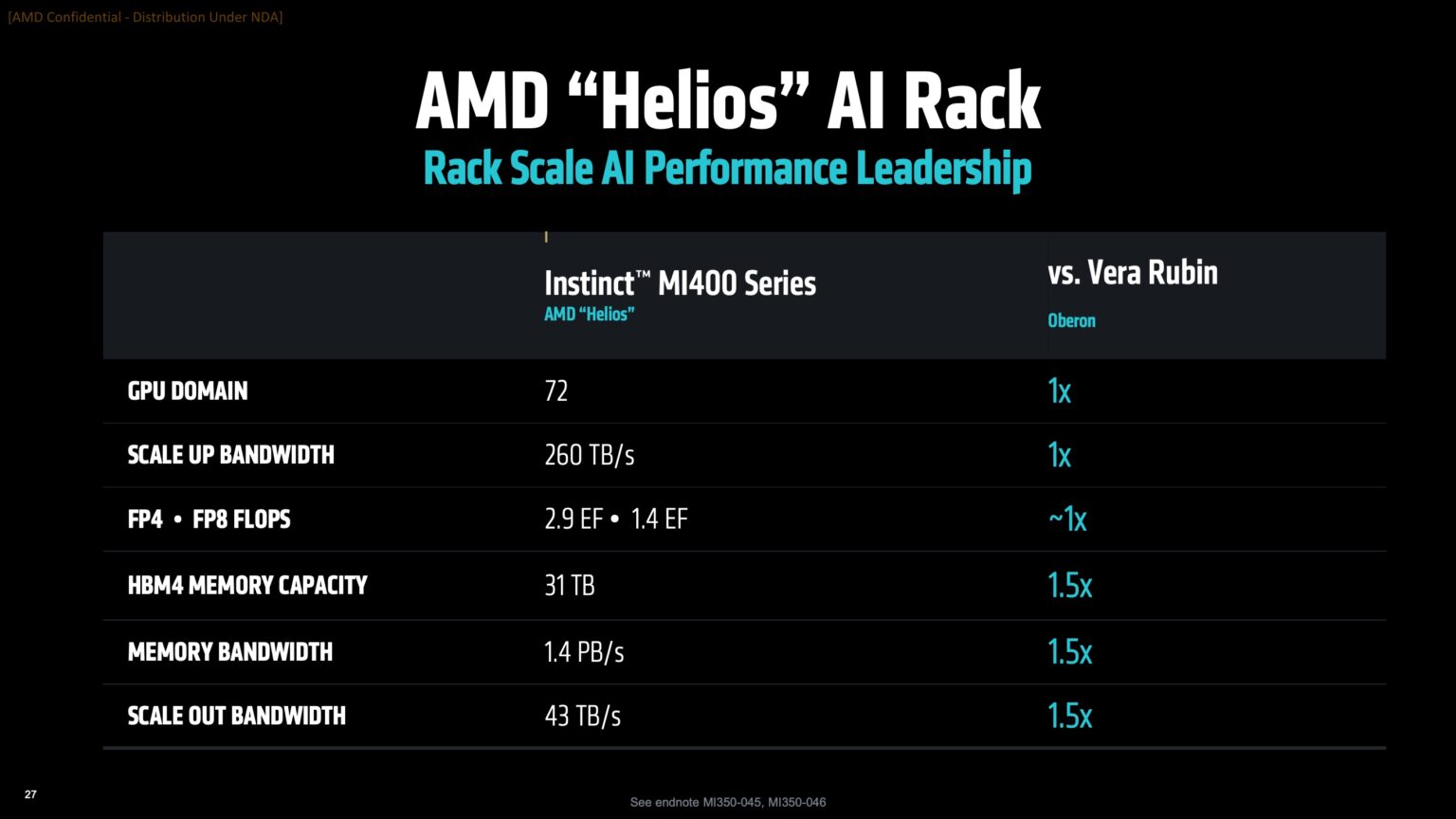

The partnership centers on AMD’s “Helios” rack-scale platform, which integrates AMD Instinct MI450 GPUs, Zen 6 EPYC “Venice” CPUs, and “Vulcano” networking hardware. Its headline specifications are:

- Advanced Manufacturing: Built on TSMC’s 2nm process node

- Memory Leadership: Up to 432GB of HBM4 memory per GPU with 19.6 TB/s of bandwidth, which is 1.5x the memory and bandwidth of competing solutions

- Scale Performance: Each rack contains 72 MI450 GPUs delivering approximately 1.4 exaFLOPS (FP8) or 2.9 exaFLOPS (FP4) of compute, with 31TB of total HBM4 memory and 1.4 PB/s aggregate bandwidth per rack
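
The per-rack totals above follow directly from the per-GPU figures. The sketch below is a simple consistency check that uses only numbers quoted in the list, assuming decimal units (1 TB = 1,000 GB); the per-GPU compute figures it derives are back-calculated from the rack totals and are not stated in the source.

```python
# Figures quoted in the specification list above.
GPUS_PER_RACK = 72
HBM_PER_GPU_GB = 432
BANDWIDTH_PER_GPU_TBS = 19.6
RACK_FP8_EXAFLOPS = 1.4
RACK_FP4_EXAFLOPS = 2.9

# Aggregate memory and bandwidth per rack (decimal units assumed).
rack_hbm_tb = GPUS_PER_RACK * HBM_PER_GPU_GB / 1000
rack_bandwidth_pbs = GPUS_PER_RACK * BANDWIDTH_PER_GPU_TBS / 1000

# Implied per-GPU compute, derived by dividing the rack totals (not a quoted spec).
per_gpu_fp8_pflops = RACK_FP8_EXAFLOPS * 1000 / GPUS_PER_RACK
per_gpu_fp4_pflops = RACK_FP4_EXAFLOPS * 1000 / GPUS_PER_RACK

print(f"HBM4 per rack: {rack_hbm_tb:.1f} TB")
print(f"Bandwidth per rack: {rack_bandwidth_pbs:.2f} PB/s")
print(f"Implied FP8 per GPU: {per_gpu_fp8_pflops:.1f} PFLOPS")
print(f"Implied FP4 per GPU: {per_gpu_fp4_pflops:.1f} PFLOPS")
```

The computed memory and bandwidth totals round to the 31TB and 1.4 PB/s quoted for the rack, so the per-GPU and per-rack figures are mutually consistent.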

AMD’s Helios platform represents a strategic shift toward open, interoperable AI infrastructure, incorporating standards such as OCP DC-MHS, UALink, and Ultra Ethernet Consortium (UEC) architectures. This approach directly contrasts with NVIDIA’s more proprietary ecosystem, potentially offering customers greater flexibility and reduced vendor lock-in.

The platform is built on Meta’s Open Rack Wide (ORW) specification, enhancing serviceability and cooling while promoting industry-wide standardization. The Vulcano networking solution provides 800G Ethernet connectivity with PCIe 6.0 support and Ultra Accelerator Link capabilities, addressing AMD’s historical networking weaknesses compared to NVIDIA’s NVLink technology.

The AI infrastructure market is growing rapidly, creating significant opportunities for this partnership. Oracle’s current cloud market share of approximately 3% presents both a challenge and an opportunity: the company needs differentiators to gain share, and this AMD partnership could serve as that catalyst.

Likely target customers include:

- Enterprise AI developers requiring massive scale for training large language models

- Research institutions needing exascale computing capabilities

- AI startups seeking alternatives to NVIDIA-dominated infrastructure

- Oracle’s existing customer base looking to integrate AI capabilities

Competitive advantages include:

- First-mover advantage as the first hyperscaler to offer a 50,000-GPU AMD supercluster

- Open ecosystem reducing vendor lock-in compared to NVIDIA’s proprietary approach

- Memory and bandwidth superiority over competing solutions

- Seamless integration with Oracle’s existing enterprise software portfolio

This partnership could accelerate the industry’s shift toward more open AI infrastructure standards, potentially reducing dependence on NVIDIA’s proprietary ecosystem. The adoption of Meta’s Open Rack Wide specification and other open standards may create ripple effects throughout the data center industry, fostering greater competition and innovation.

The Q3 2026 deployment timeline provides competitors with opportunities to advance their own offerings. However, the scale of the commitment (50,000 GPUs) and the strategic alignment between Oracle and AMD suggest serious intent to challenge the current AI infrastructure hierarchy.
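
For a sense of what a 50,000-GPU commitment means physically, the sketch below estimates the rack count and total HBM4 capacity. It assumes every GPU sits in a fully populated 72-GPU Helios rack with 432GB of HBM4 each, as described earlier; the source does not state an actual rack count, so these are rough derived figures.

```python
import math

# Figures quoted in the article.
TOTAL_GPUS = 50_000
GPUS_PER_RACK = 72       # Helios rack size
HBM_PER_GPU_GB = 432     # maximum HBM4 per MI450 GPU

# Assumption: all GPUs are deployed in fully populated Helios racks.
racks_needed = math.ceil(TOTAL_GPUS / GPUS_PER_RACK)

# Total HBM4 across the cluster, in decimal petabytes (1 PB = 1,000,000 GB).
total_hbm_pb = TOTAL_GPUS * HBM_PER_GPU_GB / 1_000_000

print(f"Racks needed: {racks_needed}")
print(f"Total HBM4 capacity: {total_hbm_pb:.1f} PB")
```

Under these assumptions the initial deployment works out to just under 700 racks and a little over 20 PB of GPU memory.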

While AMD’s hardware capabilities are impressive, the company’s ROCm software platform still trails NVIDIA’s CUDA in maturity and adoption. This represents a critical risk factor that could impact customer adoption rates, particularly for organizations heavily invested in CUDA-based workflows.

Key risks include:

- Execution Risk: Large-scale deployment of next-generation 2nm hardware carries significant technical challenges

- Software Gap: AMD’s ROCm ecosystem needs continued development to match CUDA’s maturity and developer adoption

- Market Competition: The lead time before the Q3 2026 deployment gives competitors time to advance their own AI infrastructure offerings

- Economic Uncertainty: Potential AI bubble concerns could impact long-term demand for high-cost AI infrastructure

Key opportunities include:

- Market Diversification: Customers actively seeking alternatives to NVIDIA’s market dominance

- Cost Leadership: Potentially more competitive pricing than NVIDIA-based solutions

- Enterprise Synergy: Oracle’s existing customer base provides immediate market access and integration opportunities

- Open Standards Leadership: Positioning as champions of open AI infrastructure could attract customers concerned about vendor lock-in

If successfully executed, this partnership could:

- Establish AMD as a credible competitor to NVIDIA in the data center GPU market

- Help Oracle grow its cloud market share beyond the current ~3%

- Accelerate adoption of open standards in AI infrastructure across the industry

- Provide enterprises with genuine choice and flexibility in AI computing resources

The partnership’s ultimate success will depend on flawless execution, rapid software ecosystem development, and market acceptance of AMD’s next-generation hardware. However, the unprecedented scale of the commitment and the strategic alignment between the companies suggest a serious challenge to the current AI infrastructure status quo.

The Oracle-AMD AI supercluster partnership represents a watershed moment in the AI infrastructure landscape. By combining Oracle’s enterprise software expertise and cloud infrastructure with AMD’s cutting-edge GPU technology and open standards approach, this collaboration has the potential to disrupt NVIDIA’s market dominance and provide enterprises with viable alternatives for massive-scale AI computing.

The success of this initiative will be closely watched by the entire technology industry, as it could signal the beginning of a more open, competitive, and innovative era in AI infrastructure. With deployment scheduled for Q3 2026, the coming months will be critical for both companies to demonstrate execution capability and build momentum in this strategically important market.

Insights are generated using AI models and historical data for informational purposes only. They do not constitute investment advice or recommendations. Past performance is not indicative of future results.
