In-Depth Analysis of Zhipu AI: R&D Investment, GLM Architecture, and High Gross Margin

About us: Ginlix AI is the AI Investment Copilot powered by real data, bridging advanced AI with professional financial databases to provide verifiable, truth-based answers. Please use the chat box below to ask any financial question.

Based on the latest information I have collected, I will now conduct a systematic and comprehensive analysis for you.

According to Zhipu AI’s Hong Kong IPO prospectus and publicly disclosed data [1], the company’s financial performance exhibits the typical characteristics of a large model startup: ‘high growth, high investment, high losses’:

| Financial Indicators | 2022 | 2023 | 2024 | H1 2025 |
|---|---|---|---|---|
| Revenue (CNH 100 million) | 0.57 | 1.25 | 3.12 | 1.91 |
| R&D Expenses (CNH 100 million) | 0.84 | 5.29 | 21.95 | 15.95 |
| R&D Expense Ratio (R&D / Revenue) | 147% | 425% | 703% | 835% |
| Gross Margin | 54.6% | 64.6% | 56.3% | 50.0% |
| Net Loss (CNH 100 million) | 1.44 | 7.88 | 29.58 | 23.58 |

As the data show, the R&D expense ratio reached 703% in 2024, meaning R&D spending was about 7 times revenue; the ratio climbed further to 835% in H1 2025 [2]. This is far higher than at international competitors: OpenAI's ratio of R&D spending to revenue for the same period was approximately 1.56:1, and Anthropic's was about 1.04:1 [1].
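The ratios above are simple quotients of the table's two expense and revenue rows. The short Python sketch below recomputes them from the rounded figures; the dictionary names and the helper function are illustrative, not taken from the prospectus. Because the inputs are rounded to two decimals, the results can drift from the reported percentages by a point or so (2023, for example, comes out near 423% rather than the reported 425%).

```python
# Rounded figures from the table above, in units of CNH 100 million.
REVENUE = {"2022": 0.57, "2023": 1.25, "2024": 3.12, "H1 2025": 1.91}
RND_EXPENSES = {"2022": 0.84, "2023": 5.29, "2024": 21.95, "H1 2025": 15.95}


def rnd_ratio(rnd: float, revenue: float) -> float:
    """Return R&D expenses as a multiple of revenue (1.0 means 100%)."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return rnd / revenue


def ratio_table() -> dict[str, float]:
    """Compute the R&D-to-revenue multiple for every period in the table."""
    return {period: rnd_ratio(RND_EXPENSES[period], REVENUE[period]) for period in REVENUE}


if __name__ == "__main__":
    for period, ratio in ratio_table().items():
        print(f"{period}: {ratio:.0%} of revenue ({ratio:.2f}:1)")
```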

Zhipu AI’s core technical asset is its independently developed GLM (General Language Model) architecture, which differs from the GPT architecture as follows:

| Feature | GLM Architecture | GPT Architecture |
|---|---|---|
| Pre-Training Objective | Autoregressive Blank Filling | Unidirectional Autoregressive Language Modeling |
| Context Modeling | Bidirectional Context Modeling | Pure Forward Generation |
| Generation and Comprehension | Balances generation capabilities and comprehension performance | Focuses on generation capabilities |
| Chinese Context | Stronger language comprehension ability | Relatively weak |

The core innovation of GLM is its pre-training objective: it masks out spans of text and has the model predict them autoregressively, so the model learns both generation (reconstructing the masked spans) and comprehension (understanding the surrounding context) [3]. This design gives the model particular advantages in tasks such as long-text processing, code generation, and multi-turn dialogue.
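A minimal sketch of how one blank-infilling training sample could be assembled, assuming word-level tokens and hypothetical placeholder tokens `[MASK]`, `[S]` and `[E]` for the mask, span-start and span-end markers. Each selected span is collapsed to a single mask in Part A and appended to Part B, where the model must regenerate it token by token. The original GLM paper also randomly permutes the spans in Part B; this sketch keeps them in source order so the output is deterministic.

```python
# Hypothetical special tokens; real tokenizers define their own.
MASK, START, END = "[MASK]", "[S]", "[E]"


def make_blank_infilling_example(
    tokens: list[str], spans: list[tuple[int, int]]
) -> tuple[list[str], list[str], list[str]]:
    """Return (part_a, part_b_inputs, part_b_targets) for one sample.

    spans are half-open (start, end) index pairs that must not overlap.
    """
    spans = sorted(spans)
    part_a, prev = [], 0
    for start, end in spans:
        if start < prev or end <= start or end > len(tokens):
            raise ValueError(f"invalid span {(start, end)}")
        part_a.extend(tokens[prev:start])
        part_a.append(MASK)  # the whole span collapses to one mask token
        prev = end
    part_a.extend(tokens[prev:])

    part_b_inputs, part_b_targets = [], []
    for start, end in spans:
        span = tokens[start:end]
        part_b_inputs.extend([START] + span)  # teacher-forced decoder input
        part_b_targets.extend(span + [END])   # same span shifted by one position
    return part_a, part_b_inputs, part_b_targets


if __name__ == "__main__":
    print(make_blank_infilling_example(["x1", "x2", "x3", "x4", "x5", "x6"], [(2, 3), (4, 6)]))
```

For the sequence `x1 ... x6` with `x3` and `x5 x6` masked, Part A becomes `x1 x2 [MASK] x4 [MASK]`, and Part B asks the model to produce `x3` and then `x5 x6`.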

GLM architecture has made systematic innovations in multiple technical dimensions:

- Adopts an improved attention masking strategy to enable more flexible contextual interaction

- Applies bidirectional attention within Part A (the known, partially masked text), while tokens in Part B (the content to be predicted) attend to all of Part A and only to earlier tokens in Part B

- Replaces traditional sinusoidal position encoding with rotary position embedding (RoPE), significantly enhancing long-sequence processing

- Achieves strong extrapolation: even if the model is trained on a limited sequence length, it can effectively process longer inputs [4]

- Has a long-term decay property (the attention contribution between tokens tends to weaken as their relative distance grows), enhancing the model’s handling of long-distance dependencies

- Adopts a multi-stage pre-training strategy

- Conducts targeted optimizations for different task types

- Introduces reinforcement learning frameworks (such as the slime framework designed specifically for Agent tasks)
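The Part A / Part B attention pattern described above can be expressed as a 0/1 mask. The plain-Python sketch below assumes Part A precedes Part B in a single sequence; `glm_attention_mask` is a hypothetical helper name. Row `i` lists the positions token `i` may attend to: Part A rows see all of Part A and nothing in Part B, while Part B rows see all of Part A plus a causal (left-to-right) prefix of Part B. This follows the masking scheme described in the GLM paper; production code builds the same pattern as a tensor.

```python
def glm_attention_mask(len_a: int, len_b: int) -> list[list[int]]:
    """Return an n x n 0/1 mask; mask[i][j] == 1 means token i may attend to token j.

    Positions 0..len_a-1 are Part A; positions len_a..n-1 are Part B.
    """
    n = len_a + len_b
    mask = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if j < len_a:
                # Every token, in Part A or Part B, can see all of Part A.
                mask[i][j] = 1
            elif i >= len_a and j <= i:
                # Part B tokens see Part B causally: earlier positions and themselves.
                mask[i][j] = 1
            # Otherwise (Part A looking at Part B, or Part B looking ahead): blocked.
    return mask


if __name__ == "__main__":
    for row in glm_attention_mask(len_a=3, len_b=2):
        print(row)
```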

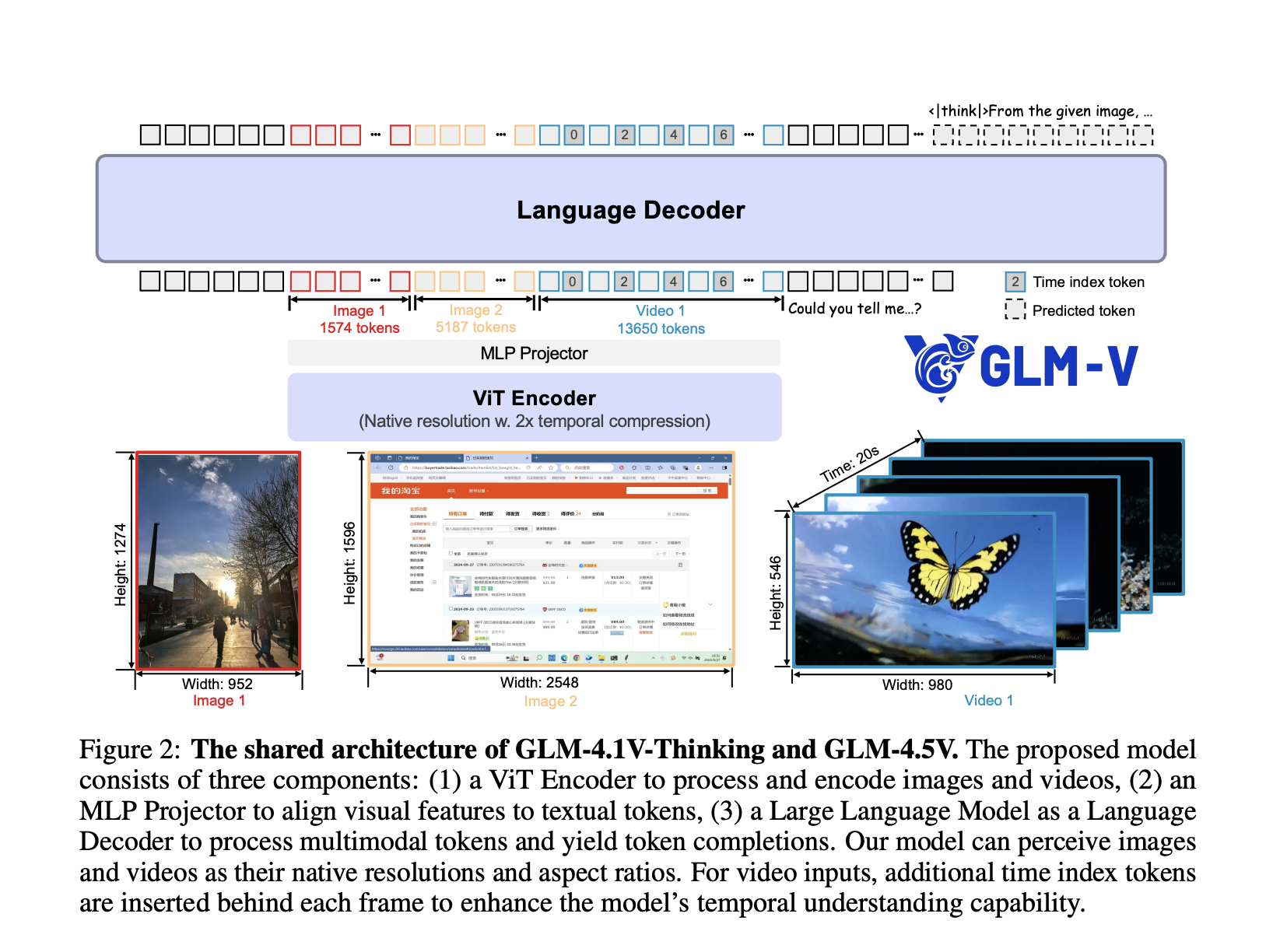
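Rotary position embedding rotates each pair of feature dimensions by an angle proportional to the token’s position, so the dot product between a rotated query and a rotated key depends only on their relative offset. The sketch below assumes the common base of 10000 and the interleaved pairing `(x[0], x[1]), (x[2], x[3]), ...`; some implementations pair the first and second halves of the vector instead. It illustrates the technique and is not Zhipu’s code.

```python
import math


def rope(x: list[float], pos: int, base: float = 10000.0) -> list[float]:
    """Apply rotary position embedding to one vector at position `pos`.

    Dimension pair (2i, 2i+1) is rotated by the angle pos * base**(-2i/d).
    """
    d = len(x)
    if d % 2 != 0:
        raise ValueError("vector dimension must be even")
    out = [0.0] * d
    for i in range(d // 2):
        theta = pos * base ** (-2 * i / d)
        c, s = math.cos(theta), math.sin(theta)
        x0, x1 = x[2 * i], x[2 * i + 1]
        # Standard 2-D rotation of the pair (x0, x1).
        out[2 * i] = x0 * c - x1 * s
        out[2 * i + 1] = x0 * s + x1 * c
    return out


def dot(a: list[float], b: list[float]) -> float:
    return sum(p * q for p, q in zip(a, b))


if __name__ == "__main__":
    q = [1.0, 0.5, -0.3, 0.8]
    k = [0.2, -1.0, 0.7, 0.4]
    # Same relative offset (2) at two different absolute positions.
    print(dot(rope(q, 5), rope(k, 3)), dot(rope(q, 12), rope(k, 10)))
```

Shifting the query and key positions by the same amount (5 and 3 versus 12 and 10) leaves the score unchanged up to floating-point error, which is the relative-position property that helps with extrapolation.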

The newly released GLM-4.5 series adopts a Mixture-of-Experts (MoE) architecture:

| Parameter Indicators | GLM-4.5 |
|---|---|
| Total Parameters | Approx. 355B (355 billion) |
| Active Parameters | 32B (32 billion) |
| Context Length | 128K tokens |
| Overall Ranking | 3rd globally across 12 mainstream benchmarks; 1st among domestic models |
| Coding Capability | Surpasses Qwen3-Coder with an 80.8% win rate |
| Tool Call Success Rate | 90.6% |

Because only about 32B of its 355B parameters are active for each token, this architecture balances ‘high performance + low resource consumption’, making it particularly suitable for high-concurrency commercial deployment [5].
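With 355B total but only 32B active parameters, each token passes through a small subset of experts chosen by a router. The sketch below shows a generic softmax top-k router in plain Python; the exact gating function, expert count and `k` used by GLM-4.5 are not given here, so every parameter is an assumption. It also computes the active-parameter fraction implied by the table (32 / 355, roughly 9%).

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: list[float], k: int) -> list[tuple[int, float]]:
    """Select the k highest-probability experts and renormalize their weights to sum to 1."""
    if not 0 < k <= len(router_logits):
        raise ValueError("k must be between 1 and the number of experts")
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in chosen)
    return [(i, probs[i] / total) for i in chosen]


def active_fraction(active_params: float, total_params: float) -> float:
    """Share of parameters used per token in a sparse MoE model."""
    return active_params / total_params


if __name__ == "__main__":
    # Hypothetical router scores for one token over 4 experts.
    print(top_k_route([0.1, 2.0, -1.0, 1.5], k=2))
    # GLM-4.5 figures from the table above (billions of parameters).
    print(f"{active_fraction(32, 355):.1%} of parameters active per token")
```

Only the chosen experts’ feed-forward layers run for that token, which is why compute per token tracks the 32B active figure rather than the 355B total.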

The core logic behind Zhipu AI’s ability to keep its gross margin at or above 50% is as follows.

Zhipu’s revenue mainly comes from two segments, with significant differences in their gross margins [2]:

| Revenue Segment | 2024 Revenue | Share of 2024 Revenue | Gross Margin | Share of H1 2025 Revenue |
|---|---|---|---|---|
| Localized Deployment | CNH 263.9 million | 84.5% | 59%-68% | 84.8% |
| Cloud Deployment (MaaS) | CNH 48.5 million | 15.5% | 76.1% → 3.4% → -0.4% | 15.2% |

- Localized deployment contributes the majority of revenue and gross profit, with a stable gross margin of around 60%, making it a high-margin business

- Cloud deployment saw its gross margin plummet from 76.1% to negative territory amid domestic price wars; its main goal is to capture market share and build the developer ecosystem
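A company-wide gross margin is the revenue-weighted average of its segment margins. The sketch below assumes that the last value in the cloud series (-0.4%) is the 2024 cloud margin and that the 56.3% figure from the first table is the 2024 company-wide margin; under those assumptions it solves for the localized-deployment margin. The helper names are illustrative.

```python
def blended_margin(segments: list[tuple[float, float]]) -> float:
    """Revenue-weighted gross margin; segments are (revenue, gross_margin) pairs."""
    total_revenue = sum(revenue for revenue, _ in segments)
    return sum(revenue * margin for revenue, margin in segments) / total_revenue


def implied_segment_margin(company_margin: float, known_revenue: float,
                           known_margin: float, unknown_revenue: float) -> float:
    """Solve for one segment's margin from the company-wide margin and the other segment."""
    total_revenue = known_revenue + unknown_revenue
    gross_profit = company_margin * total_revenue
    return (gross_profit - known_revenue * known_margin) / unknown_revenue


# 2024 revenue by segment (CNH million) from the table above.
LOCAL_REVENUE, CLOUD_REVENUE = 263.9, 48.5
# Assumptions: -0.4% is the 2024 cloud margin; 56.3% is the 2024 company-wide margin.
CLOUD_MARGIN_2024, COMPANY_MARGIN_2024 = -0.004, 0.563

local_margin = implied_segment_margin(COMPANY_MARGIN_2024, CLOUD_REVENUE,
                                      CLOUD_MARGIN_2024, LOCAL_REVENUE)

if __name__ == "__main__":
    print(f"Implied localized-deployment margin: {local_margin:.1%}")
```

Under these assumptions the implied localized margin is about 66.7%, inside the 59%-68% range reported for that segment, so the segment-level and company-level figures are mutually consistent.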

The “generalization” design of the GLM architecture allows the model to adapt quickly to vertical scenarios:

- A single general model can serve over 12,000 enterprise customers, covering multiple industries such as the internet, public services, telecommunications, consumer electronics, retail, and media

- Customer repurchase rate exceeds 70%

- Marginal service costs are extremely low, with significant economies of scale
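The economies-of-scale argument is the standard average-cost identity: a largely fixed cost (training and maintaining one general model) is spread over more customers, while each additional customer adds only a small marginal serving cost. The function and the numbers in the example are hypothetical and carry no information about Zhipu’s actual cost structure.

```python
def average_cost_per_customer(fixed_cost: float, marginal_cost: float, customers: int) -> float:
    """Average cost = fixed cost spread over all customers + per-customer marginal cost."""
    if customers <= 0:
        raise ValueError("customers must be positive")
    return fixed_cost / customers + marginal_cost


if __name__ == "__main__":
    # Hypothetical numbers in arbitrary currency units.
    for n in (100, 1_000, 10_000):
        print(n, average_cost_per_customer(fixed_cost=1_000.0, marginal_cost=2.0, customers=n))
```

With a hypothetical fixed cost of 1,000 and a marginal cost of 2 per customer, the average cost falls from 12 at 100 customers to 3 at 1,000 customers and approaches the marginal cost as the customer base grows.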

The GLM architecture has completed in-depth adaptation to domestic AI chips, including:

- Cambricon (FP8+Int4 hybrid quantization deployment)

- Moore Threads (stable operation with native FP8 precision)
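The source does not describe how the Cambricon FP8+Int4 hybrid scheme assigns precisions, so the sketch below illustrates only the INT4 half with a generic symmetric, per-tensor quantizer: weights are scaled so the largest magnitude maps to 7 and rounded into the signed 4-bit range [-8, 7]. The function names are hypothetical, and real deployments usually quantize per channel or per group rather than per tensor.

```python
def quantize_int4(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor INT4 quantization: map max |w| to 7, clamp to [-8, 7]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 7 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero input
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate weights from the integer codes."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.7, -0.7, 0.2, 0.0, 0.33]
    codes, scale = quantize_int4(weights)
    print(codes, dequantize(codes, scale))
```

Each weight is stored as a 4-bit code plus one shared scale, which cuts memory roughly fourfold versus 16-bit weights at the cost of a rounding error of at most half a quantization step.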

In the current international environment, this capability is strategically significant: it ensures supply chain security and lays the foundation for future cost reductions. Computing power currently accounts for 71.8% of R&D spending, so substituting domestic chips will be key to reaching a cost inflection point [2].

The MaaS (Model as a Service) platform has the following strengths:

- Its paid API revenue exceeds that of all other domestic models combined

- The GLM Coding package (priced at 1/7 of Claude’s) acquired 150,000 paid users in 3 months, with ARR exceeding CNH 100 million

- The platform’s network effect continuously reduces customer acquisition costs
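A quick back-of-the-envelope check on the coding-package figures: dividing the reported ARR floor by the paid-user count gives the implied average annual revenue per paying user. This is a lower bound under the assumption that both figures refer to the same point in time; the constants come from the bullets above and the function name is illustrative.

```python
def implied_revenue_per_user(arr: float, paid_users: int) -> float:
    """Average annual recurring revenue per paying user."""
    if paid_users <= 0:
        raise ValueError("paid_users must be positive")
    return arr / paid_users


# From the text: ARR "exceeding CNH 100 million" (a floor) and 150,000 paid users.
ARR_FLOOR_CNH = 100_000_000
PAID_USERS = 150_000

per_user_year = implied_revenue_per_user(ARR_FLOOR_CNH, PAID_USERS)
per_user_month = per_user_year / 12

if __name__ == "__main__":
    print(f"~CNH {per_user_year:,.0f} per user per year, ~CNH {per_user_month:,.0f} per month")
```

This works out to roughly CNH 667 per user per year, or about CNH 56 per month.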

In overseas markets, the company adopts a “high cost-performance ratio + strong capabilities” strategy [7]:

- The GLM Coding package is priced at 1/7 of Anthropic Claude’s

- Trades price advantages for market share and developer ecosystem

- Overseas revenue already accounts for 11.6% of total revenue and continues to grow

From the perspective of industry development patterns, heavy R&D investment at the current stage is a strategic necessity:

| Comparison Dimension | Zhipu AI | OpenAI | Anthropic |
|---|---|---|---|
| R&D Expense Ratio (R&D : Revenue) | 8.4:1 | 1.56:1 | 1.04:1 |
| Commercialization Stage | Early Stage | Mid Stage | Mid Stage |
| Strategic Focus | Technological Catch-Up | Market Share | Technological Leadership |

Zhipu’s management clearly stated: “

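The decline in gross margin from the 2023 peak of 64.6% to 50% in H1 2025 is not a sign of operational deterioration, but rather an expected consequence of the company’s business-model transition, which can be divided into three stages: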

- Initial Stage (2023): Focused on high-end on-premises (localized) deployment, with high gross margin but limited scale

- Transformation Stage (2024-2025): Transforming to MaaS platform and standardized products, sacrificing short-term gross profit for scale

- Mature Stage (expected after 2026): Economies of scale will be released, and gross margin is expected to stabilize and rebound

| Evaluation Dimension | GLM-4.7 | GPT-5.2 | Remarks |
|---|---|---|---|
| Artificial Analysis Index | 68 points (1st among domestic models, 6th globally) | - | 1st among global open-source models |
| Code Generation (Code Arena) | 1st globally | Behind | Surpassed OpenAI for the first time |
| Mathematical Reasoning (AIME24) | 91.0 points | - | Significantly outperforms Claude 4 Opus (75.7) |
| Coding Test (SWE-bench) | 64.2% | - | Close to Claude 4 Sonnet (70.4%) |

In June 2025, OpenAI listed Zhipu as its

With a financial structure of

- Technological Aspect: The differentiated design of the GLM architecture (autoregressive blank filling + bidirectional context modeling + domestic chip adaptation) has built a technological moat

- Commercial Aspect: “Generalization” capabilities result in extremely low marginal costs, supporting high gross margins; the network effect of the MaaS platform accumulates momentum for long-term growth

- Strategic Aspect: Sacrificing short-term profits to gain technological leadership and market share, and achieving profitability through economies of scale in the long term

The current R&D expense ratio, which is 7-8 times revenue, is a

[1] Touzijie - “Zhipu AI, Ranked ‘Second’” (https://news.pedaily.cn/202512/558915.shtml)

[2] UniFuncs - “In-Depth Research Report on Zhipu AI: A Panoramic Analysis of Technology, Capital, and Commercialization Paths” (https://unifuncs.com/s/jDsMFzqJ)

[3] Guancha.cn - “The ‘Chinese OpenAI’ from Tsinghua Laboratory” (https://user.guancha.cn/main/content?id=1582269)

[4] Beijing AI Institute - “Understand Rotary Position Embedding (RoPE) in 10 Minutes” (https://hub.baai.ac.cn/view/29979)

[5] Chinaz.com - “2025 Global AI Large Model Recommendation List: In-Depth Comparison of GLM-4.5 vs Qwen3-235B-A22B” (https://www.sohu.com/a/919507513_114774)

[6] The Paper - “‘Chinese Version of OpenAI’ Zhipu Goes Public, the Value of China’s AI Large Models Continues to Rise” (https://m.thepaper.cn/newsDetail_forward_32343111)

[7] Sina Finance - “Beijing’s First Large Model Stock: Zhipu Races for Hong Kong IPO, Raising Over CNH 8 Billion with Annual Revenue Exceeding CNH 300 Million” (https://finance.sina.com.cn/stock/t/2025-12-20/doc-inhcnchz4454223.shtml)

Insights are generated using AI models and historical data for informational purposes only. They do not constitute investment advice or recommendations. Past performance is not indicative of future results.
