Comprehensive Analysis of AMD's New Generation AI Chip Competitive Position: Strategy and Prospects Against NVIDIA


About us: Ginlix AI is the AI Investment Copilot powered by real data, bridging advanced AI with professional financial databases to provide verifiable, truth-based answers. Please use the chat box below to ask any financial question.


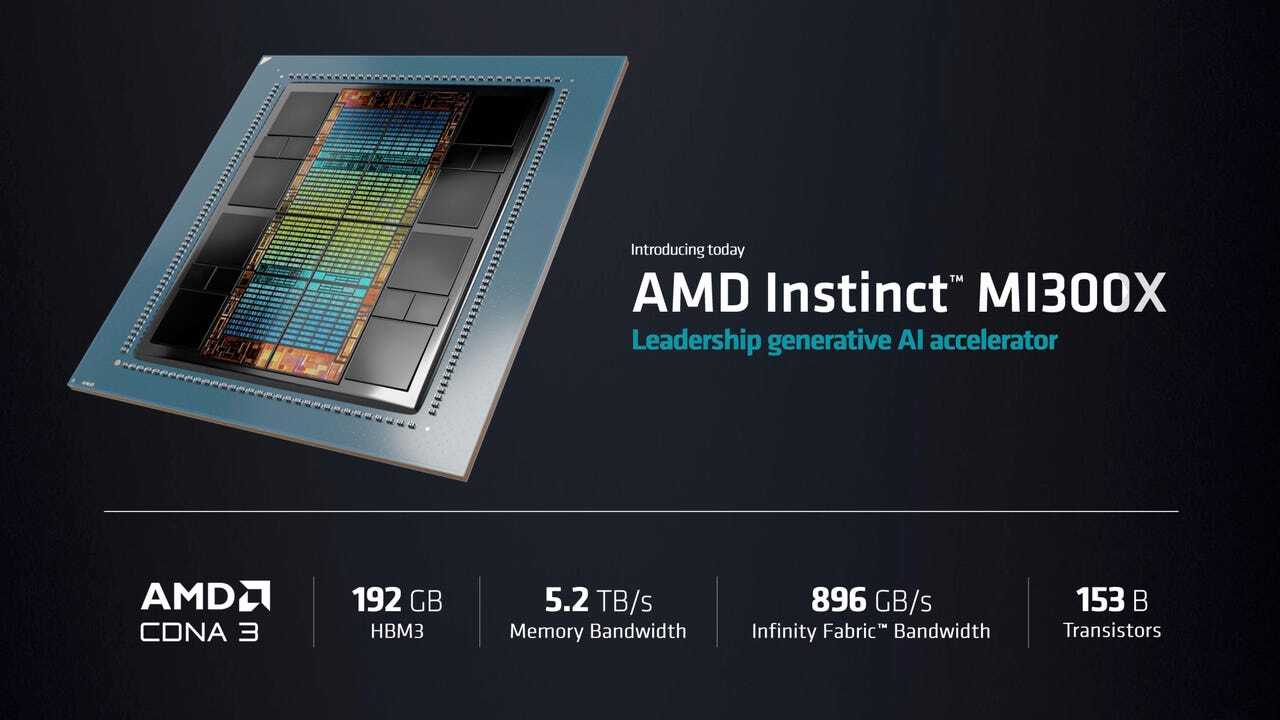

AMD’s Instinct MI300X, launched at AMD’s Advancing AI event in December 2023, represents an important technological breakthrough in the AI accelerator market. Compared to NVIDIA H100, MI300X has achieved

- 192GB HBM3 high-bandwidth memory, which is 2.4 times the capacity of NVIDIA H100 [1]

- 5.3 TB/s memory bandwidth, 60% higher than H100’s 3.3 TB/s [1]

- This means MI300X can fit larger large language models (LLMs) on a single chip, significantly reducing multi-chip communication overhead

- FP64 double-precision floating point: 163.4 teraflops, 2.4 times that of H100 [1]

- FP32 single-precision floating point: 163.4 teraflops (matrix and vector operations), 2.4 times that of H100 [1]

- FP16 peak performance: 1,307.4 teraflops, 32.1% higher than H100/H200’s 989.5 teraflops [2]

- Power consumption design: 750W TDP (slightly higher than H100’s 700W) [1]
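The ratios quoted in the list above follow from simple division. The sketch below is a minimal check, not a benchmark. It assumes an 80 GB H100, a capacity that this report does not state but that the "2.4 times" claim implies; every other figure is taken from the list above.

```python
# Spec figures as quoted in this report: (MI300X, H100).
# The 80 GB H100 capacity is an assumption implied by the "2.4 times"
# claim; it is not stated in the text.
specs = {
    "memory_gb": (192.0, 80.0),
    "bandwidth_tbs": (5.3, 3.3),
    "fp16_tflops": (1307.4, 989.5),
    "tdp_watts": (750.0, 700.0),
}


def ratio(mi300x: float, h100: float) -> float:
    """Return the MI300X figure divided by the H100 figure."""
    return mi300x / h100


ratios = {name: ratio(a, b) for name, (a, b) in specs.items()}

for name, r in ratios.items():
    # Print both the multiple and the percentage difference.
    print(f"{name}: {r:.2f}x ({(r - 1) * 100:+.1f}%)")
```

With these inputs the memory ratio comes out at 2.4x, bandwidth at roughly 61% higher, FP16 throughput at roughly 32% higher, and TDP at roughly 7% higher.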

According to MLPerf benchmarks and third-party evaluations:

- Llama 2 70B inference performance: A single MI300X reaches 2,530.7 tokens/second, comparable to H100’s performance [2]

- Inference efficiency advantage: With larger memory capacity, MI300X can run complete model shards on a single device, reducing cross-device communication latency [3]

- HPC workloads: In high-performance computing tasks, MI300X “not only competes with H100 but can also claim performance leadership” [4]

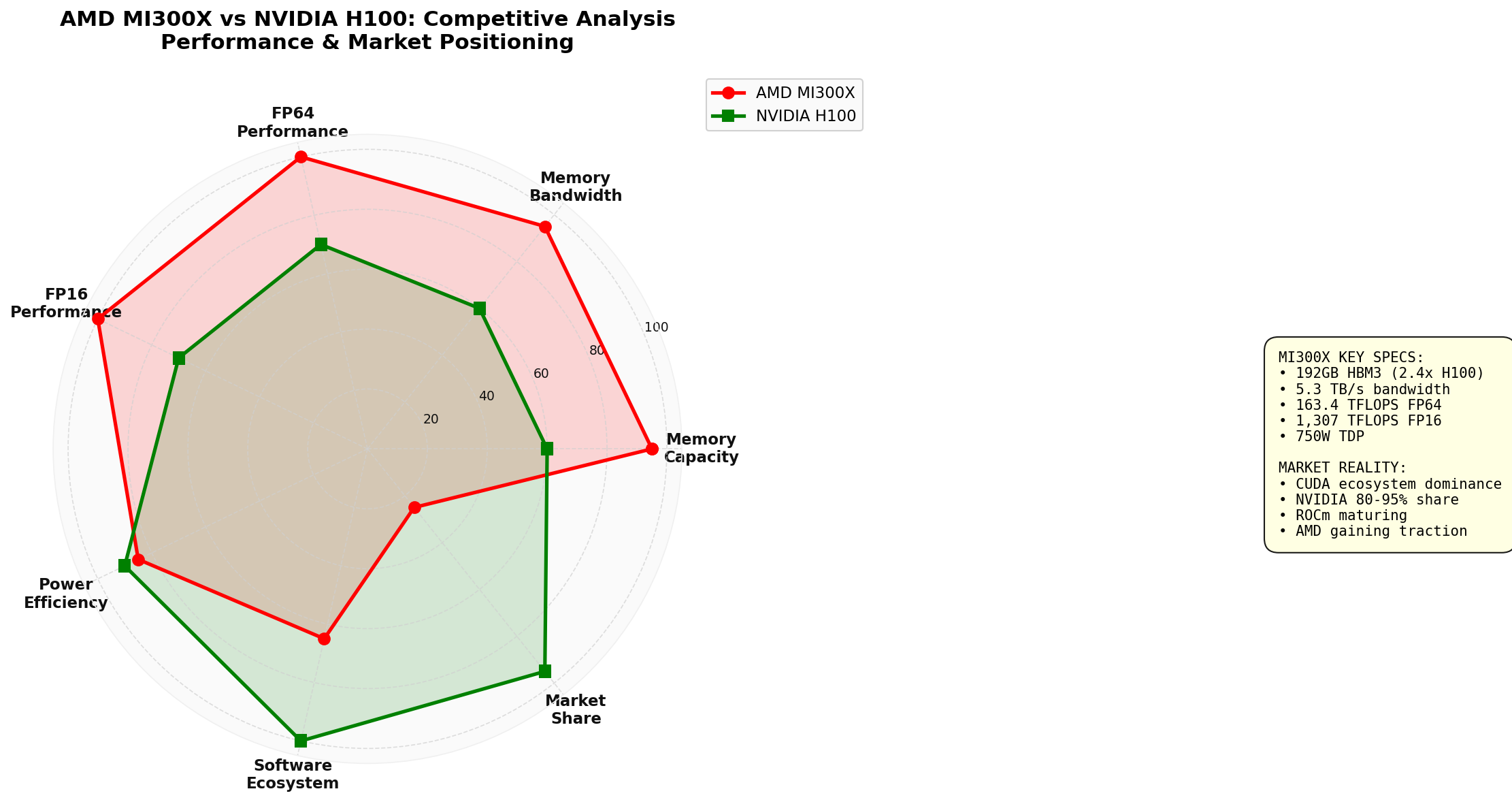

The radar chart shows that MI300X leads H100 across hardware performance indicators (memory capacity, bandwidth, computing performance) but still trails significantly in software ecosystem and market share.

AMD achieved

- Meta: Uses MI300X to power its 405 billion parameter Llama 3.1 model, with a reported order of about 170,000 units [3]

- Microsoft: Deploys MI300X in Azure cloud services, becoming an important strategic partner [5]

- OpenAI: Reached a 6-gigawatt strategic cooperation agreement with AMD to deploy large-scale MI300X clusters [5]

- Oracle: Deploys 50,000 MI300X GPUs in OCI Supercluster [5]

- MI300X shipments exceeded 327,000 units in 2024, with Meta accounting for about half [3]

- Seven top AI companies are publicly deploying MI300-based systems [3]

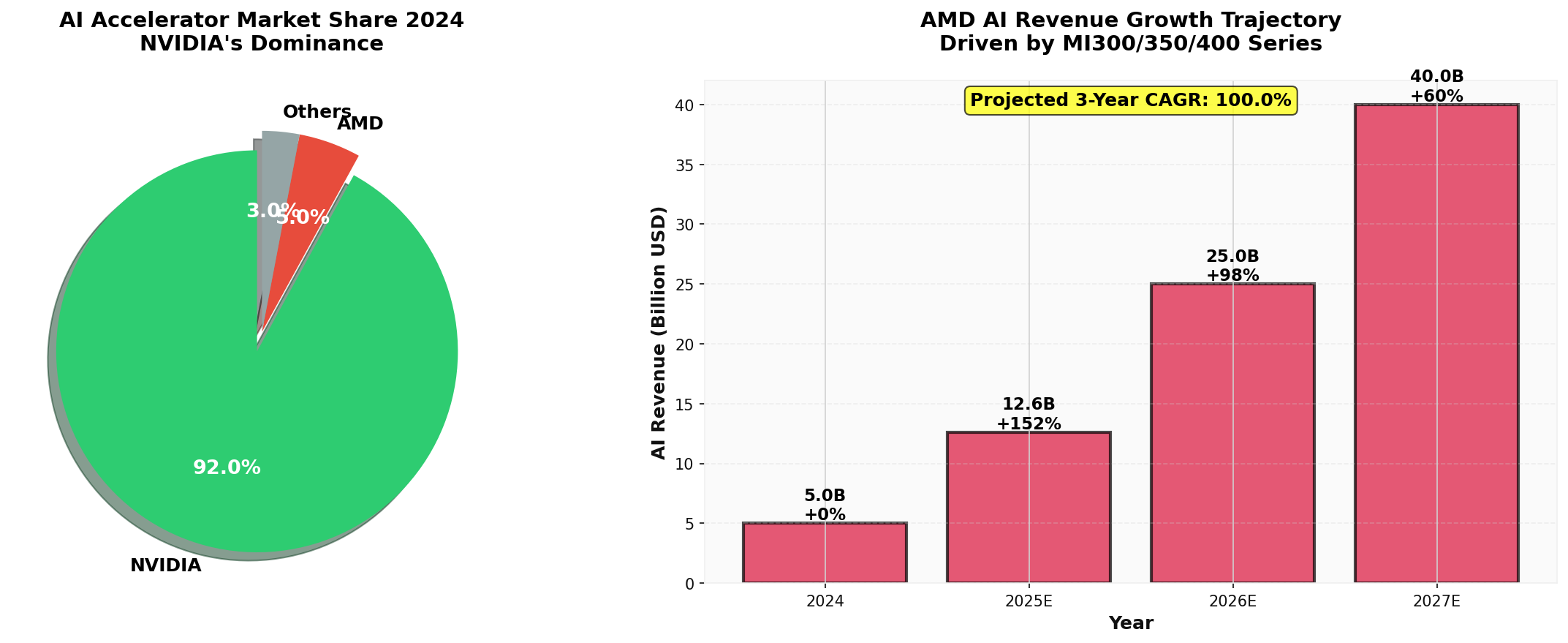

- AMD’s data center AI revenue is expected to grow from approximately $5 billion in 2024 with a clear path [3]

Despite significant progress, AMD’s market share still has a

- 2024 AI GPU market share: NVIDIA accounts for 80-95% (with a 92% share in data center GPUs), while AMD has only a single-digit share [6]

- Data center revenue comparison (Q3 2025):

  - NVIDIA: $57.1 billion

  - AMD: $4.34 billion

  - The gap is about 13 times [0]

- Market capitalization gap: NVIDIA ($4.58 trillion) vs AMD ($358.8 billion), a difference of nearly 13 times [0]
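The two gaps in this list are plain ratios of the quoted figures. A minimal sketch for checking them, using only the numbers given above (variable names are illustrative):

```python
# Figures as quoted in this report, in USD billions.
nvidia_dc_revenue = 57.1
amd_dc_revenue = 4.34
nvidia_market_cap = 4580.0  # $4.58 trillion
amd_market_cap = 358.8

# Each gap is NVIDIA's figure expressed as a multiple of AMD's.
revenue_gap = nvidia_dc_revenue / amd_dc_revenue
market_cap_gap = nvidia_market_cap / amd_market_cap

print(f"Data center revenue gap: {revenue_gap:.1f}x")
print(f"Market capitalization gap: {market_cap_gap:.1f}x")
```

On these inputs the revenue multiple works out to roughly 13.2 and the market capitalization multiple to roughly 12.8.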

Left chart: 2024 AI accelerator market share shows NVIDIA’s overwhelming advantage; Right chart: AMD’s AI revenue growth trajectory shows strong upward momentum, with an expected 3-year CAGR of 102%

NVIDIA’s real advantage lies not only in hardware but also in its

- Mature library optimization: Highly optimized deep learning framework integration and library support [7]

- Broad developer base: Millions of developers proficient in CUDA programming

- Enterprise-level support: Complete toolchain, debuggers, and performance analysis tools

- Concurrent performance advantage: In high-intensity concurrent request scenarios, the CUDA execution stack shows better scalability [7]

- Although MI300X surpasses H100 in on-paper specifications, in actual SaaS environments software maturity rather than raw computing power becomes the dominant factor in performance [7]

- The ROCm platform shows performance plateaus in concurrent benchmark tests, while CUDA can continuously expand throughput [7]

AMD’s ROCm software stack, although starting late (released in 2016), is

- Open source transparency: Developers can inspect, modify, and contribute to every layer of the system [8]

- Cost advantage: AMD hardware prices are generally 15-40% lower than similar NVIDIA products, providing appeal for budget-sensitive projects [8]

- Rapid progress: ROCm version 6.1.2 has significantly improved compatibility with the PyTorch framework [2]

- Adoption by top clients (Meta, Microsoft, OpenAI) verifies ROCm’s production readiness [3,5]

- AMD continues to invest in ROCm development, with library optimization and framework integration accelerating

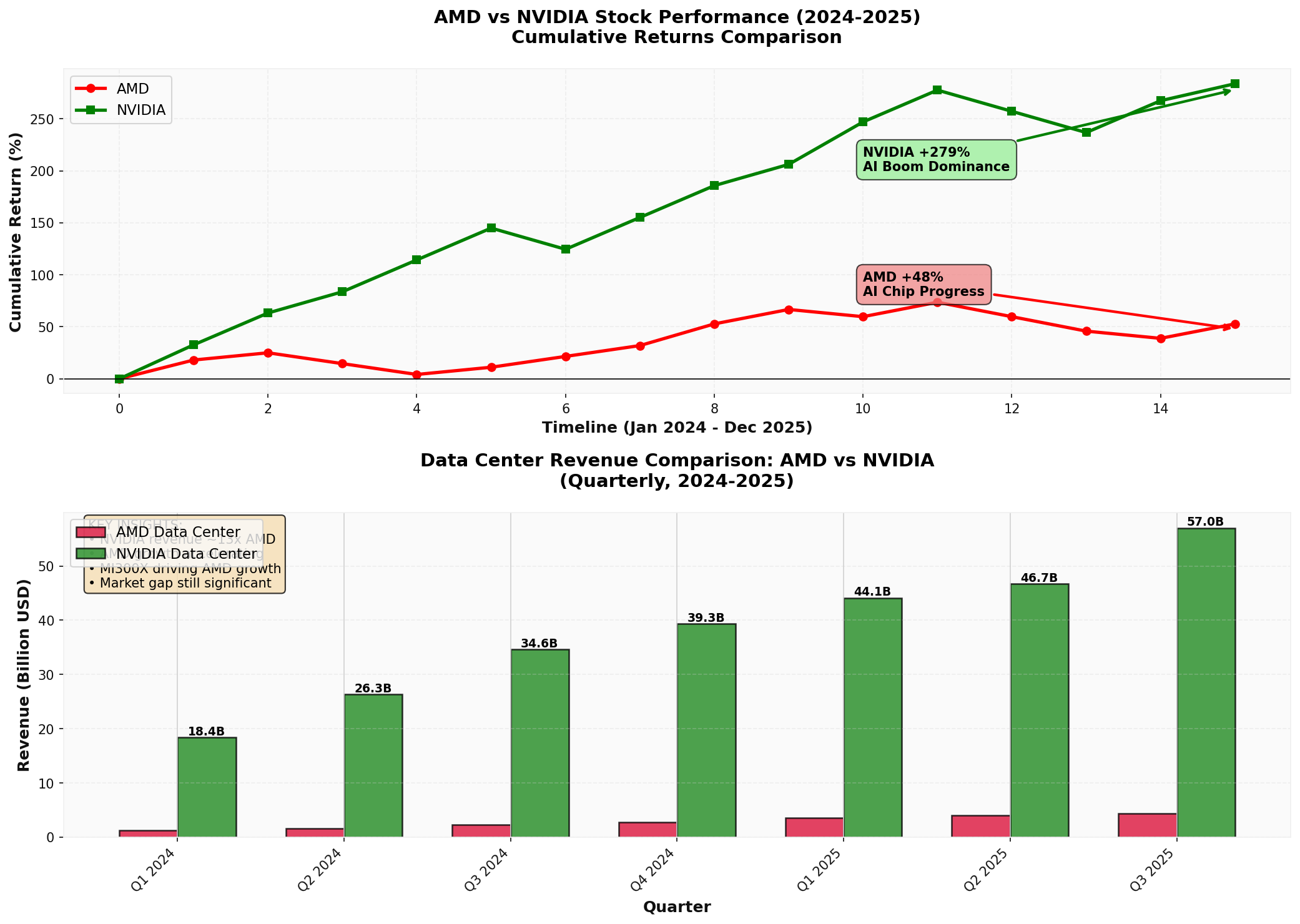

The top chart shows NVIDIA’s dominant position in the AI wave (+279%), while AMD has made progress (+48%) but lags significantly; The bottom chart reflects the huge gap in data center revenue

- NVIDIA: Rose from $49.24 to $186.50, an increase of +278.76%

- AMD: Rose from $144.28 to $214.16, an increase of +48.43%

- The market has given overwhelming recognition to NVIDIA’s dominant position in the AI chip field

- NVIDIA P/E: 46.13x (market cap $4.58 trillion)

- AMD P/E: 108.73x (market cap $358.8 billion)

- The roughly 13x market cap gap reflects the market’s view that NVIDIA’s position is unshakable

- AMD’s high P/E (108.73x) indicates investors’ high growth expectations

- Any evidence that shakes NVIDIA’s hegemonic narrative will reposition the valuations of the two stocks [6]

- 35x inference performance improvement (compared to MI300X) [9]

- 288GB HBM3E memory (further expanding capacity advantage) [9]

- Has received deployment commitments from Microsoft, Meta, and OpenAI [9]

- Targets NVIDIA Blackwell B200

- Expected to start contributing revenue in 2026 [3]

- 2025: Approximately $395 billion (55% YoY growth)

- 2026: Approximately $602 billion (34% YoY growth)

- 2027: Approximately $615 billion (16% YoY growth) [10,11]

- About 75% of capital expenditure will be used for AI infrastructure

- AI-specific expenditure in 2026 is approximately $450 billion [10]

- Even if AMD gains a 10-15% market share, it means tens of billions of dollars in revenue opportunities
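The "tens of billions" estimate above can be reproduced with two multiplications. The sketch below is a rough sizing exercise, not a forecast. It treats the entire AI-specific spend as the addressable market, which is a simplifying assumption since that spend also covers networking, facilities, and other items. The helper name `revenue_opportunity` is illustrative.

```python
# Figures as quoted in this report, in USD billions.
capex_2026 = 602.0
ai_share_of_capex = 0.75
ai_capex_2026_quoted = 450.0  # the rounded figure cited in the list

# Cross-check: 75% of total 2026 capex should be close to the quoted figure.
ai_capex_2026 = capex_2026 * ai_share_of_capex


def revenue_opportunity(market_size: float, share: float) -> float:
    """Revenue implied by capturing `share` of `market_size`.

    Assumption: the whole market is addressable by AMD.
    """
    return market_size * share


low = revenue_opportunity(ai_capex_2026_quoted, 0.10)
high = revenue_opportunity(ai_capex_2026_quoted, 0.15)

print(f"AI-specific capex (derived): ${ai_capex_2026:.1f}B")
print(f"Opportunity at 10-15% share: ${low:.1f}B to ${high:.1f}B")
```

Under these assumptions, 75% of $602 billion is $451.5 billion, in line with the quoted $450 billion, and a 10-15% share corresponds to about $45 billion to $67.5 billion.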

According to AI-2027 research institute forecasts:

- Global AI-related computing volume will grow from the current 10 million H100 equivalent units to 100 million H100 equivalent units by the end of 2027

- Annual compound growth rate of 2.25x [11]

- This provides a huge market expansion space for AMD
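The relationship between the tenfold increase and the 2.25x annual multiplier quoted above can be checked with a logarithm. This is a minimal sketch using only those two figures; the `project` helper is illustrative and assumes smooth compounding at a constant rate.

```python
import math

# Figures as quoted in this report (H100 equivalent units).
start_units = 10e6
end_units = 100e6
annual_multiplier = 2.25

# Years needed to grow from start to end at a constant annual multiplier:
# start * multiplier**years = end  =>  years = ln(end/start) / ln(multiplier)
years_needed = math.log(end_units / start_units) / math.log(annual_multiplier)


def project(units: float, multiplier: float, years: float) -> float:
    """Compound `units` at `multiplier` per year for `years` years."""
    return units * multiplier ** years


print(f"Years for a 10x increase at 2.25x/year: {years_needed:.2f}")
print(f"Units after 2 years: {project(start_units, annual_multiplier, 2) / 1e6:.1f} million")
```

At 2.25x per year, a tenfold increase takes a little under three years of compounding.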

- CUDA ecosystem lock-in:

  - High enterprise migration costs (code rewriting, personnel training, toolchain updates)

  - Tendency to protect existing investments

- Supply chain constraints:

  - Tight supply of HBM3E memory

  - Advanced packaging capacity bottlenecks

  - Competition for TSMC CoWoS capacity

- Customer self-developed chips:

  - Google TPU, AWS Trainium, Meta’s possible custom chips

  - May compress the third-party market space in the long run

AMD’s MI308 export to China is affected by

✅

- Hardware performance leadership: MI300X surpasses H100 in key indicators, proving technical feasibility

- Customer breakthroughs: Gained validation from top clients like Meta, Microsoft, and OpenAI

- Revenue growth: Data center AI revenue is rising rapidly from $5 billion in 2024

- Product roadmap: MI350/MI400 shows commitment to continuous innovation

❌

- Still low market share: Single-digit share in the NVIDIA-dominated market

- Software ecosystem gap: Although ROCm is progressing, there is still a gap compared to CUDA

- Revenue scale gap: Data center revenue is only 1/13 of NVIDIA’s

- Valuation gap: Market capitalization gap reflects the market’s recognition of NVIDIA’s moat

- AI revenue reaches the $10-15 billion level

- Market cap may approach $500-800 billion

- Becomes a strong competitor in the AI chip market

- AI revenue reaches $6-10 billion

- Maintains current valuation multiples

- Becomes a reliable second choice

- Market is squeezed by NVIDIA and cloud service providers’ self-developed chips

- AI revenue growth slows down

- Valuation is under pressure

- Focus on ROCm ecosystem progress and developer adoption rate

- Track MI350/MI400 shipment volume and customer feedback

- Monitor data center revenue growth rate and gross margin

- Pay attention to hyperscale client capital expenditure direction

- Mid-2025: MI350 series release and initial deployment

- Q4 2025: Evaluate MI300X’s full-year shipment volume and revenue contribution

- 2026: MI400 series competitiveness verification

AMD’s new generation AI chip strategy has achieved

- Hardware advantages are obvious but market penetration is slow: Converting performance leadership into market share takes time

- Software ecosystem is the key battlefield: ROCm needs continuous investment to narrow the gap with CUDA

- Customer adoption is happening: Wins from Meta, Microsoft, OpenAI, etc., provide strong validation

- Huge market opportunities: Explosive growth in hyperscale cloud service providers’ capital expenditure provides sufficient space for AMD

The strategy of competing against NVIDIA is

For investors, AMD provides an investment opportunity with

[0] Jinling API Data - AMD and NVIDIA company profiles, financial data, stock price performance (2024-2025)

[1] NetworkWorld - “AMD launches Instinct AI accelerator to compete with Nvidia” (January 2024) - MI300X technical specifications and comparison data with H100

https://www.networkworld.com/article/1251844/amd-launches-instinct-ai-accelerator-to-compete-with-nvidia.html

[2] The Next Platform - “The First AI Benchmarks Pitting AMD Against Nvidia” (September 2024) - MLPerf benchmark analysis

https://www.nextplatform.com/2024/09/03/the-first-ai-benchmarks-pitting-amd-against-nvidia/

[3] Seeking Alpha - “AMD’s MI350: The AI Accelerator That Could Challenge Nvidia’s Dominance in 2026” (December 2025) - Market share, customer adoption, and product roadmap

https://seekingalpha.com/article/4856532-amds-mi350-ai-accelerator-that-could-challenge-nvidias-dominance-in-2026

[4] Tom’s Hardware - “AMD MI300X performance compared with Nvidia H100” (October 2024) - Third-party performance evaluation

https://www.tomshardware.com/pc-components/gpus/amd-mi300x-performance-compared-with-nvidia-h100

[5] MLQ.ai - “AI Chips & Accelerators Research” (2025) - Customer deployment cases and data center revenue data

https://mlq.ai/research/ai-chips/

[6] FinancialContent Markets - “NVIDIA: Powering the AI Revolution and Navigating a Trillion Dollar Future” (December 2025) - Market share statistics

https://markets.financialcontent.com/stocks/article/predictstreet-2025-12-6-nvidia-powering-the-ai-revolution-and-navigating-a-trillion-dollar-future

[7] AI Multiple Research - “GPU Software for AI: CUDA vs. ROCm in 2026” (2026) - In-depth comparison of software ecosystems

https://research.aimultiple.com/cuda-vs-rocm/

[8] Thundercompute - “ROCm vs CUDA: Which GPU Computing System Wins” (2025) - Open source advantage analysis

https://www.thundercompute.com/blog/rocm-vs-cuda-gpu-computing

[9] Christian Investing - “AMD Q2 2025: Built to Win the AI Wars” (August 2025) - MI350 specifications and customer commitments

https://christianinvesting.substack.com/p/amd-q2-2025-built-to-win-the-ai-wars

[10] CreditSights - “Technology: Hyperscaler Capex 2026 Estimates” (2025) - Cloud service provider capital expenditure forecast

https://know.creditsights.com/insights/technology-hyperscaler-capex-2026-estimates/

[11] AI-2027 - “Compute Forecast” (2025) - AI computing volume growth forecast

https://ai-2027.com/research/compute-forecast

[12] LinkedIn - “ROCm vs. CUDA: A Practical Comparison for AI Developers” (2025) - Developer perspective comparison

https://www.linkedin.com/pulse/rocm-vs-cuda-practical-comparison-ai-developers-rodney-puplampu-usbuc

Insights are generated using AI models and historical data for informational purposes only. They do not constitute investment advice or recommendations. Past performance is not indicative of future results.
