Compal Unveils SGX30-2 AI Server with NVIDIA HGX B300 at OCP Global Summit 2025


About us: Ginlix AI is the AI Investment Copilot powered by real data, bridging advanced AI with professional financial databases to provide verifiable, truth-based answers. Please use the chat box below to ask any financial question.


This analysis is based on the Compal press release and product showcase at the 2025 OCP Global Summit [1], which mark a significant strategic move by Compal Electronics into the high-performance AI server market. The announcement, dated October 16, 2025, comes as AI workloads place unprecedented demands on data center infrastructure [1].

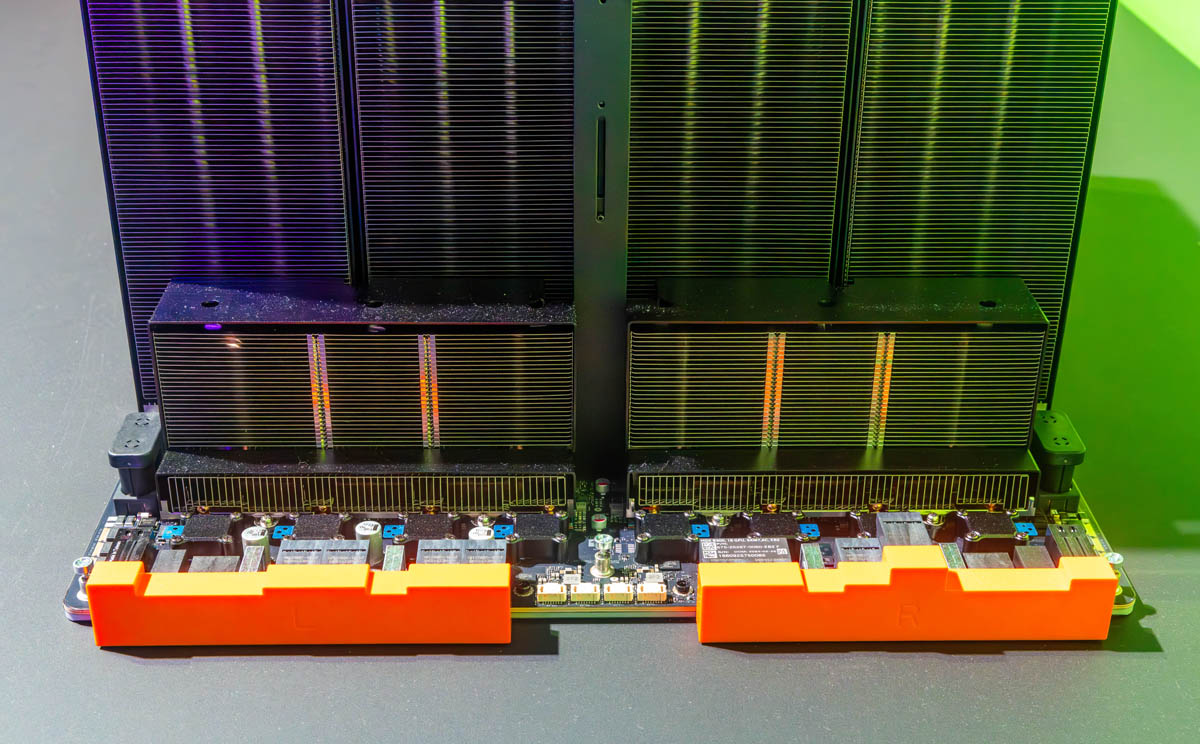

The SGX30-2 server announcement demonstrates Compal’s comprehensive approach to addressing AI infrastructure challenges through integrated solutions combining NVIDIA’s HGX B300 platform with Blackwell Ultra GPUs, CXL-based memory pooling, RDMA fabrics, and advanced liquid cooling technologies [1][2][3]. This integrated stack spans from chip-level compute to rack-level thermal management, targeting the primary constraints facing hyperscale AI deployments: compute density, memory bandwidth, and energy efficiency.

The timing of this announcement aligns with industry-wide transitions. Major consultancies project substantial increases in data center power consumption due to AI workloads, and the liquid cooling market is projected to grow at a 27.6% CAGR through 2030 [4][5][6]. Compal’s emphasis on partial PUE (pPUE) targets below 1.1 directly addresses these emerging efficiency requirements.
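To make the pPUE target concrete, the following is a minimal sketch of the usual partial PUE ratio: total power drawn inside a chosen boundary divided by the IT power inside that boundary. The function names and the 100 kW rack figure are hypothetical illustrations and do not come from Compal's announcement.

```python
def partial_pue(it_power_kw: float, overhead_power_kw: float) -> float:
    """Partial PUE for one boundary (for example a rack and its cooling loop).

    pPUE = (IT power + overhead power inside the boundary) / IT power.
    """
    if it_power_kw <= 0:
        raise ValueError("IT power must be positive")
    if overhead_power_kw < 0:
        raise ValueError("overhead power cannot be negative")
    return (it_power_kw + overhead_power_kw) / it_power_kw


def max_overhead_kw(it_power_kw: float, target_ppue: float) -> float:
    """Largest overhead (cooling, pumps, fans) that still meets the target."""
    if target_ppue < 1.0:
        raise ValueError("pPUE cannot be below 1.0")
    return it_power_kw * (target_ppue - 1.0)


if __name__ == "__main__":
    rack_it_kw = 100.0  # hypothetical rack load, not a Compal figure
    budget = max_overhead_kw(rack_it_kw, target_ppue=1.1)
    print(f"Overhead budget at pPUE 1.1: {budget:.1f} kW")
    print(f"pPUE with 8 kW of overhead: {partial_pue(rack_it_kw, 8.0):.2f}")
```

Under these assumptions, a pPUE below 1.1 means cooling and other in-boundary overhead must stay under roughly 10% of the IT load, which is why the target is tied to liquid cooling rather than conventional air cooling.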

Risks:

- Technology Adoption Barriers: CXL fabric maturity and liquid cooling operational expertise remain challenges for enterprise adoption [1][2]

- Supply Chain Constraints: Limited availability of Blackwell Ultra GPUs and HBM3e modules could restrict production scaling [3][8]

- Facility Readiness: Data center operators require significant infrastructure upgrades for liquid cooling and higher power densities [4][5]

- Competitive Response: Established AI server vendors may accelerate their own integrated solutions, increasing competitive pressure [8][9]

Opportunities:

- First-Mover Advantage: Early integration of CXL and liquid cooling with HGX B300 could secure design wins with hyperscalers [1][2]

- Market Growth: Liquid cooling market projected to grow from $4.9 billion in 2024 to $21.3 billion by 2030 [4]

- Energy Efficiency Premium: Systems that meet pPUE targets below 1.1 can command premium pricing in energy-constrained markets [1][5]

- Ecosystem Leadership: Early adoption positions Compal as a thought leader in AI infrastructure integration [2][3]
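The market growth figures above can be cross-checked against the cited 27.6% CAGR. The sketch below assumes six compounding years (2024 to 2030) and uses the standard compound annual growth rate formula. The function name is illustrative, and the source does not state which base year its CAGR uses.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or end_value <= 0:
        raise ValueError("values must be positive")
    if years <= 0:
        raise ValueError("years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Figures from the article: $4.9B (2024) to $21.3B (2030) [4]
    implied = cagr(4.9, 21.3, 2030 - 2024)
    print(f"Implied CAGR: {implied:.2%}")
    print(f"Gap versus cited 27.6%: {abs(implied - 0.276):.2%}")
```

With these inputs the implied rate comes out within a fraction of a percentage point of the cited 27.6%, so the two figures are broadly consistent. The small gap could stem from rounding or a different base period in the underlying report.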

Compal’s SGX30-2 announcement represents a comprehensive response to AI-driven data center challenges, integrating NVIDIA’s HGX B300 platform with advanced memory and cooling technologies. The server supports eight Blackwell Ultra GPUs with CXL-based memory pooling and offers both direct-to-chip and immersion cooling options targeting pPUE below 1.1 [1][2][3].

The announcement signals Compal’s strategic expansion into higher-margin AI server integration, where it competes directly with established OEMs while leveraging its manufacturing scale. The integrated solution addresses the constraints on compute density, memory bandwidth, and energy efficiency that currently limit how far AI deployments can scale [4][5][6].

Industry projections indicate accelerating demand for such integrated solutions, with liquid cooling adoption growing rapidly and data center power consumption from AI workloads increasing significantly. The success of Compal’s approach will depend on technology maturity, supply chain availability, and customer facility readiness for advanced cooling and power infrastructure [4][5][6].

The announcement reflects broader industry trends toward disaggregated memory architectures, liquid cooling adoption, and integrated AI infrastructure solutions that reduce deployment complexity for hyperscale operators [1][2][3][4].

Insights are generated using AI models and historical data for informational purposes only. They do not constitute investment advice or recommendations. Past performance is not indicative of future results.
